> History of Information Theory

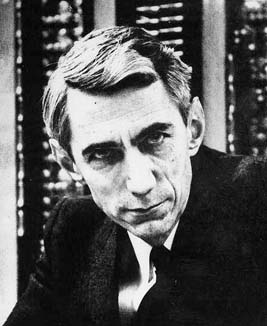

Claude Shannon

1916-2001

Look at a compact disc

under a microscope and you will see music

represented as a sequence of pits, or in

mathematical terms, as a sequence of 0's

and 1's, commonly referred to as bits.

The foundation of our Information Age is

this transformation of speech, audio,

images and video into digital content,

and the man who started the digital

revolution was Claude Shannon, who died

February 24, 2001, at the age of 84, after a

long struggle with Alzheimer's disease.

Shannon arrived at the revolutionary idea

of digital representation by sampling the

information source at an appropriate

rate, and converting the samples to a bit

stream. He characterized the source by a

single number, the entropy, adapting a

term from statistical mechanics, to

quantify the information content of the

source. For English language text,

Shannon viewed entropy as a statistical

parameter that measured how much

information is produced on the average by

each letter. He also created coding

theory, by introducing redundancy into

the digital representation to protect

against corruption. If today you take a

compact disc in one hand, take a pair of

scissors in the other hand, and score the

disc along a radius from the center to

the edge, then you will find that the

disc still plays as if new.
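
As a rough illustration of entropy as "information per letter", the sketch below estimates it from single-letter frequencies alone. The function name `letter_entropy` is hypothetical, and the estimate ignores the dependence between neighbouring letters, so for real English text it will tend to overstate the figure Shannon had in mind.

```python
import math
from collections import Counter


def letter_entropy(text: str) -> float:
    """Empirical entropy, in bits per letter, from single-letter frequencies.

    Assumption: letters are treated as independent draws, which real
    English is not, so this is only an upper-bound style estimate.
    """
    # Keep alphabetic characters only, ignoring case.
    letters = [c for c in text.lower() if c.isalpha()]
    if not letters:
        return 0.0
    counts = Counter(letters)
    total = len(letters)
    # H = -sum(p * log2(p)) over the observed letter frequencies.
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


if __name__ == "__main__":
    sample = "the foundation of our information age"
    print(f"{letter_entropy(sample):.3f} bits per letter")
```

A text that uses only one letter carries no information per letter under this measure, while a text that uses 26 letters equally often would reach the maximum of log2(26), about 4.7 bits.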

Before Shannon,

it was commonly believed that the only

way of achieving arbitrarily small

probability of error in a communication

channel was to reduce the transmission

rate to zero. All this changed in 1948

with the publication of A Mathematical

Theory of Communication, where Shannon

characterized a channel by a single

parameter, the channel capacity, and

showed that it was possible to transmit

information at any rate below capacity

with an arbitrarily small probability of

error. His method of proof was to show

the existence of a single good code by

averaging over all possible codes. His

paper established fundamental limits on

the efficiency of communication over

noisy channels, and presented the

challenge of finding families of codes

that achieve capacity. The method of

random coding does not produce an

explicit example of a good code, and in

fact it has taken fifty years for coding

theorists to discover codes that come

close to these fundamental limits on

telephone line channels.
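
To make "a single parameter, the channel capacity" concrete, here is a minimal sketch for one textbook case that the article does not itself discuss: the binary symmetric channel, which flips each transmitted bit independently with probability `p`. Its capacity is 1 - H(p) bits per channel use, where H is the binary entropy function. The function names are hypothetical.

```python
import math


def binary_entropy(p: float) -> float:
    """Binary entropy H(p) in bits, with H(0) = H(1) = 0 by convention."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(p: float) -> float:
    """Capacity, in bits per use, of a binary symmetric channel
    with crossover (bit-flip) probability p."""
    return 1.0 - binary_entropy(p)


if __name__ == "__main__":
    for p in (0.0, 0.01, 0.11, 0.5):
        print(f"flip probability {p:4.2f}: capacity {bsc_capacity(p):.3f} bits/use")
```

Under this model a channel that flips about 11% of its bits still has a capacity of roughly half a bit per use, so reliable communication remains possible at any rate below that, while a channel that flips half its bits has capacity zero.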

The importance of Shannon’s work was

recognized immediately. According to a

1953 issue of Fortune Magazine: "It

may be no exaggeration to say that

man's progress in peace, and security in

war, depend more on fruitful applications

of information theory than on physical

demonstrations, either in bombs or in

power plants, that Einstein's famous

equation works". In fact his work

has become more important over time with

the advent of deep space communication,

wireless phones, high speed data

networks, the Internet, and products like

compact disc players, hard drives, and

high speed modems that make essential use

of coding and data compression to improve

speed and reliability.

Shannon grew up in Gaylord, Michigan, and

began his education at the University of

Michigan, where he majored in both

Mathematics and Electrical Engineering.

As a graduate student at MIT, his

familiarity with both the mathematics of

Boolean Algebra and the practice of

circuit design produced what H.H.

Goldstine called: "one of the most

important master's theses ever written

... a landmark in that it changed circuit

design from an art to a science".

This thesis, A Symbolic Analysis of Relay

and Switching Circuits, written in 1937,

provided mathematical techniques for

building a network of switches and relays

to realize a specific logical function,

such as a combination lock. It won the

Alfred Noble Prize of the combined

engineering societies of the USA and is

fundamental in the design of digital

computers and integrated circuits.
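
The idea of treating switch networks algebraically can be sketched in a few lines. The example below assumes the modern convention that a closed switch is `True`, switches in series behave like AND, and switches in parallel behave like OR; the function names and the particular circuit are hypothetical illustrations, not taken from the thesis. It checks by exhaustive truth table that a four-contact network can be replaced by an equivalent three-contact one.

```python
from itertools import product


def series(a: bool, b: bool) -> bool:
    """Two contacts in series conduct only if both are closed (AND)."""
    return a and b


def parallel(a: bool, b: bool) -> bool:
    """Two contacts in parallel conduct if either is closed (OR)."""
    return a or b


def circuit_original(x: bool, y: bool, z: bool) -> bool:
    # (x in series with y) in parallel with (x in series with z): four contacts.
    return parallel(series(x, y), series(x, z))


def circuit_simplified(x: bool, y: bool, z: bool) -> bool:
    # x in series with (y in parallel with z): three contacts.
    return series(x, parallel(y, z))


def equivalent(f, g, n_inputs: int) -> bool:
    """Compare two circuits on every combination of switch positions."""
    return all(
        f(*bits) == g(*bits)
        for bits in product([False, True], repeat=n_inputs)
    )


if __name__ == "__main__":
    print(equivalent(circuit_original, circuit_simplified, 3))
```

The simplification is just the distributive law of Boolean algebra, which is the kind of symbolic manipulation that replaces trial-and-error circuit design.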

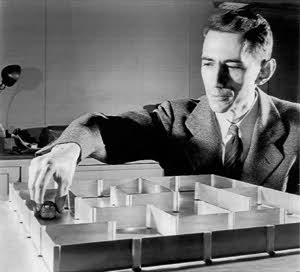

Shannon's

interest in circuit design was not purely

theoretical, for he also liked to build,

and his sense of play is evident in many

of his creations. In the 1950's, when

computers were given names like ENIAC

(Electronic Numerical Integrator and

Computer), Shannon built a computer

called THROBAC I (THrifty ROman-numeral

BAckward-looking Computer), which was

able to add, subtract, multiply and even

divide numbers up to 85 working only with

Roman numerals. His study in Winchester,

Mass., was filled with such devices,

including a maze-solving mechanical mouse

and a miraculous juggling machine.

Traversing the ceiling was a rotating

chain, like those at dry cleaners, from

which were suspended the gowns from a

score of honorary doctorates. They made a

splendid sight flying around the room.

Shannon's 1941 doctoral dissertation, on

the mathematical theory of genetics, is

not as well known as his master's thesis, and in fact was not

published until 1993, by which time most

of the results had been obtained

independently by others. After graduating

from MIT, Shannon spent a year at the

Institute for Advanced Study, and this is

the period when he began to develop the

theoretical framework that led to his

1948 paper on communication in the

presence of noise. He joined Bell Labs in

1941, and remained there for 15 years,

after which he returned to MIT. During

World War II his work on encryption led

to the system used by Roosevelt and

Churchill for transoceanic conferences,

and inspired his pioneering work on the

mathematical theory of cryptography.

It was at Bell Labs that Shannon produced

the series of papers that transformed the

world, and that transformation continues

today. In 1948, Shannon was connecting

information theory and physics by

developing his new perspective on entropy

and its relation to the laws of

thermodynamics. That connection is

evolving today, as others explore the

implications of quantum computing, by

enlarging information theory to treat the

transmission and processing of quantum

states.

Shannon must rank

near the top of the list of the major

figures of Twentieth Century science,

though his name is relatively unknown to

the general public. His influence on

everyday life, which is already

tremendous, can only increase with the

passage of time.

Robert Calderbank and Neil J. A. Sloane